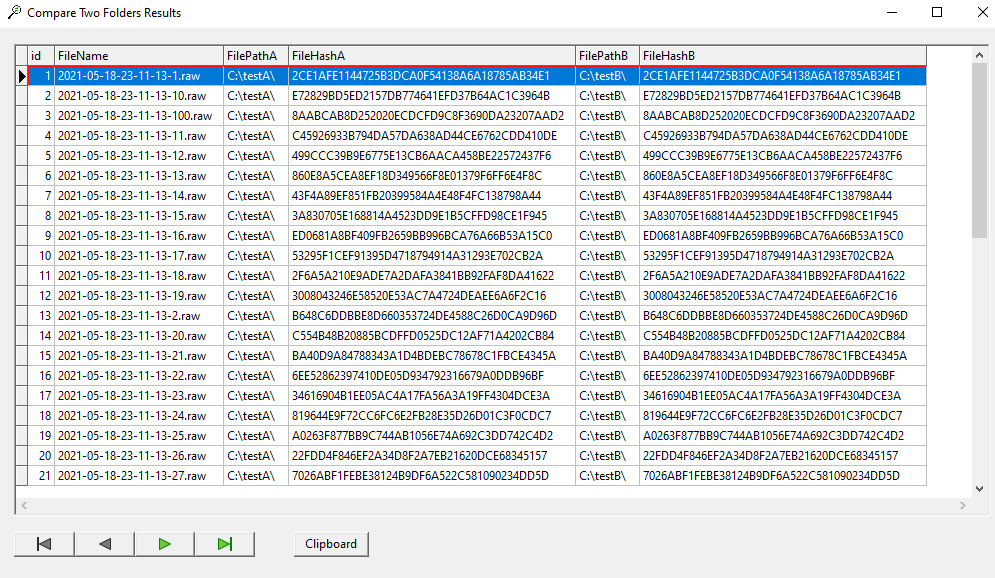

QuickHash GUI v3.3.0 – What is new?

As we celebrate 10 years of QuickHash-GUI, I am releasing v3.3.0, the biggest update to the program in several years.

It has been a monumental amount of work for one hobbyist, and I am thankful to the couple of heroic open-source community members who helped with parts of this release. I am not going to list the entire CHANGELOG here because there is too much for one blog post, but I encourage you to read it over on GitHub. I will, however, provide a “brief” insight below.

OSX Big Sur

OSX Big Sur presented me with a bit of a headache. Apple removed many of the common library files that QuickHash previously looked up and used, such as SQLite, so those files no longer exist on the OSX filesystem. Instead, Big Sur provides them through a “shared dyld cache”. This meant QuickHash’s SQLite lookup had to be adjusted. I hadn’t realised this until some time after the release of Big Sur, when users kept reporting to me that they couldn’t run the tool on OSX.

So with version 3.3.0 of QuickHash, you should now be able to run it on OSX Big Sur. The only caveat is that you won’t be able to run v3.3.0 on older versions of OSX anymore. You can continue to run v3.2.0 or below on those older versions, but obviously you then won’t get the benefits of v3.3.0. Blame Apple.

Improved “Save as…” Performance

Then there is the issue of “big data”. QuickHash is used far more than I ever anticipated when I started it 10 years ago. Some people use it for millions of files, and one user I know of has even used it for billions of files! Such large file counts bring large demands. One issue came to light recently from a user comparing two folders of around 500K files, who noted that “Save to CSV” took “a few hours”. When I directed him to the SQLite back-end and suggested using an SQLite explorer browser extension, he could query the data and export it in seconds. This made me realise the GUI itself must be causing a significant lag. On investigation, I found that, because of the order in which I created the display grids, the grid was being repainted for every row written to the output file. So, 500K times over, the interface was redrawing 500K rows! I never realised this. I have made efforts to rectify it, which should make writing CSV and HTML output for large data sets much faster.
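To see why a repaint per row hurts so much, here is a toy cost model in Python. It is not QuickHash’s own code. `FakeGrid` is a hypothetical grid that redraws every row on each repaint, and its `begin_update`/`end_update`/`touch` methods are illustrative assumptions rather than a real toolkit API. The same export runs twice: once with a repaint after every row written, and once with repaints suspended until the end.

```python
import io


class FakeGrid:
    """Hypothetical stand-in for a GUI display grid that counts row draws."""

    def __init__(self, rows):
        self.rows = list(rows)
        self.rows_drawn = 0   # total rows drawn across all repaints
        self._suspended = 0   # > 0 while repaints are suspended

    def begin_update(self):
        self._suspended += 1

    def end_update(self):
        self._suspended -= 1
        if self._suspended == 0:
            self._repaint()   # one repaint once the bulk work is finished

    def touch(self):
        # Hypothetical per-row access (e.g. moving the current row) that
        # triggers a full repaint unless repaints are suspended.
        if self._suspended == 0:
            self._repaint()

    def _repaint(self):
        self.rows_drawn += len(self.rows)   # a full repaint redraws every row


def export_csv(grid, out, suspend_repaints):
    """Write every grid row to `out` and return how many rows were drawn."""
    if suspend_repaints:
        grid.begin_update()
    for row in grid.rows:
        out.write(",".join(row) + "\n")
        grid.touch()
    if suspend_repaints:
        grid.end_update()
    return grid.rows_drawn


rows = [(f"file{i}.raw", f"{i:040X}") for i in range(1000)]
print(export_csv(FakeGrid(rows), io.StringIO(), suspend_repaints=False))  # 1000000
print(export_csv(FakeGrid(rows), io.StringIO(), suspend_repaints=True))   # 1000
```

With 1,000 rows, the per-row version draws a million rows and the suspended version draws 1,000. At 500K rows, that quadratic term is what turns seconds into hours.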

Accessing the SQLite Database

Following on from that, I also felt it would be useful for users to be able to easily copy the SQLite database itself. There is now a button in several tabs that lets the user copy it wherever they want, without first having to find it, so they can open it in dedicated database tools such as SQLite Browser (http://sqlitebrowser.org) or one of the many browser extensions/add-ons.

It does come with a health warning, though: the SQLite file is open at the time the copy is made, so the copy may not contain all the expected data and could, potentially, cause system instability. Nevertheless, the option is there, and it seems to work on all platforms (on Windows it uses the Windows API to make the copy, whereas on OSX and Linux the standard copy routine works OK). It lets users explore the data generated by QuickHash in potentially more flexible database environments if they feel the interface is too limiting or not sufficiently performant.
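For anyone scripting their own copy of an open database, SQLite’s online backup API, exposed in Python as `sqlite3.Connection.backup`, is one way to reduce the “copy of an open file” risk. This is not what QuickHash does (it uses the Windows API or a standard file copy). The backup API copies pages under SQLite’s own locking, so the result is a consistent snapshot of whatever has been committed at that moment. Uncommitted work is still not included. The file names below are hypothetical.

```python
import os
import sqlite3
import tempfile


def copy_live_database(src_path, dest_path):
    """Copy an SQLite database that may still be open in another connection."""
    src = sqlite3.connect(src_path)
    dest = sqlite3.connect(dest_path)
    try:
        with dest:
            src.backup(dest)   # page-by-page copy via SQLite's backup API
    finally:
        dest.close()
        src.close()


with tempfile.TemporaryDirectory() as tmp:
    live_path = os.path.join(tmp, "quickhash-demo.sqlite")   # hypothetical name
    copy_path = os.path.join(tmp, "copy.sqlite")
    live = sqlite3.connect(live_path)   # stays open during the copy
    live.execute("CREATE TABLE files (name TEXT, hash TEXT)")
    live.execute("INSERT INTO files VALUES ('a.raw', 'ABC')")
    live.commit()
    copy_live_database(live_path, copy_path)
    check = sqlite3.connect(copy_path)
    copied_rows = check.execute("SELECT name, hash FROM files").fetchall()
    check.close()
    live.close()
print(copied_rows)  # [('a.raw', 'ABC')]
```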

Environment Checker

Following on from that, the About menu now has two extra options. One is an Environment Checker, which tells you the name and path of the SQLite database, the expected libraries and their expected hash values, and a few other details that will likely be added to in the future.
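Checking an expected library against an expected hash can be sketched like this. The post does not say which hash algorithm the Environment Checker uses or what its library list contains. SHA-1, the report labels and the library file names below are assumptions for illustration only.

```python
import hashlib
import os
import tempfile
from pathlib import Path


def sha1_of_file(path, chunk_size=1 << 20):
    """SHA-1 of a file, read in chunks so large files are not loaded at once."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def check_libraries(expected):
    """Return {path: 'OK' | 'MISSING' | 'HASH MISMATCH'} for each expected library."""
    report = {}
    for path, expected_hash in expected.items():
        if not Path(path).is_file():
            report[path] = "MISSING"
        elif sha1_of_file(path) != expected_hash.upper():
            report[path] = "HASH MISMATCH"
        else:
            report[path] = "OK"
    return report


with tempfile.TemporaryDirectory() as tmp:
    good = os.path.join(tmp, "libdemo.so")        # hypothetical library files
    changed = os.path.join(tmp, "libchanged.so")
    Path(good).write_bytes(b"abc")
    Path(changed).write_bytes(b"abd")
    abc_sha1 = "A9993E364706816ABA3E25717850C26C9CD0D89D"   # SHA-1 of b"abc"
    report = check_libraries({
        good: abc_sha1,
        changed: abc_sha1,
        os.path.join(tmp, "libmissing.so"): "0" * 40,
    })
statuses = list(report.values())
print(statuses)  # ['OK', 'HASH MISMATCH', 'MISSING']
```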

SQLite Version

The other new menu item is an SQLite version lookup, which quickly reports which version of SQLite QuickHash-GUI is using. This is useful for debugging, and the version is also reported in the Environment Checker mentioned above. Why was this added? Well, v3.3.0 included a major rework of the SQL statements. But after I had spent weeks getting them right, it turned out that some of them only worked with versions of SQLite above v3.25, which are not always shipped with Linux distributions or OSX systems. For example, Linux Mint 19.3 ships with v3.22, OSX Big Sur with v3.32.3 (or thereabouts), and this new version of QuickHash comes with v3.35 of SQLite. Linux users are often my focus, and many of us use Long Term Support (LTS) releases, so forcing them to upgrade their operating system to use one data hashing tool seems excessive. So we had to rewrite the SQL to accommodate this, and while I was at it, I thought this new feature would help diagnose future bug reports.
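A runtime version check along these lines is easy to sketch in Python. The threshold is an assumption. Window functions (e.g. `ROW_NUMBER() OVER ...`) first appeared in SQLite 3.25.0, which makes them a plausible example of a version-sensitive feature, but the post does not say which statements were affected. The point is to ask the library actually loaded at runtime, via `SELECT sqlite_version()`, rather than trusting whatever the OS is assumed to ship.

```python
import sqlite3

# Assumed threshold: window functions first appeared in SQLite 3.25.0.
MIN_VERSION = (3, 25, 0)


def parse_version(text):
    """'3.22' -> (3, 22, 0); '3.35.5' -> (3, 35, 5)."""
    parts = [int(p) for p in text.split(".")[:3]]
    return tuple(parts + [0] * (3 - len(parts)))


def runtime_sqlite_version():
    """Ask the SQLite library actually loaded at runtime for its version."""
    conn = sqlite3.connect(":memory:")
    try:
        return conn.execute("SELECT sqlite_version()").fetchone()[0]
    finally:
        conn.close()


version = runtime_sqlite_version()
print("SQLite in use:", version)
print("Newer SQL features available:", parse_version(version) >= MIN_VERSION)
# Versions quoted in the post: Linux Mint 19.3, OSX Big Sur, bundled with QuickHash.
for shipped in ("3.22", "3.32.3", "3.35"):
    print(shipped, parse_version(shipped) >= MIN_VERSION)
```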

“Compare Two Folders” Mis-Alignments for File Count Mismatches

Now this one was a biggie, and I have to thank the open-source SQL ninja volunteer who helped me here. In v3.2.0 there is a row mis-alignment issue in the “Compare Two Folders” tab. It occurs when two folders mis-match by file count (rather than by hash). Let me unpack this one some more.

It was only whilst developing v3.3.0, nearly a year after the release of v3.2.0 (where the bug was introduced), that I identified a bug in the “Compare Two Folders” tab. Many users do not need this functionality. Those who do typically use it on two folders they expect to match by file count and file hash anyway, so many will not have encountered or reported the bug. But it turns out there was an error in the SQL statement I use to populate the results grid.

What this means is:

1) It’s fine if FolderA contains the same number of files as FolderB

2) It’s fine if all the hashes of FolderA match the hashes of FolderB

3) If there is a mis-match of hashes but all the file counts match, it correctly reports a mis-match and correctly aligns all the files in the grid.

4) If there is a mis-match of file count (so the two sets of hashes also differ by at least one entry), it correctly reports the mis-match, as it should.

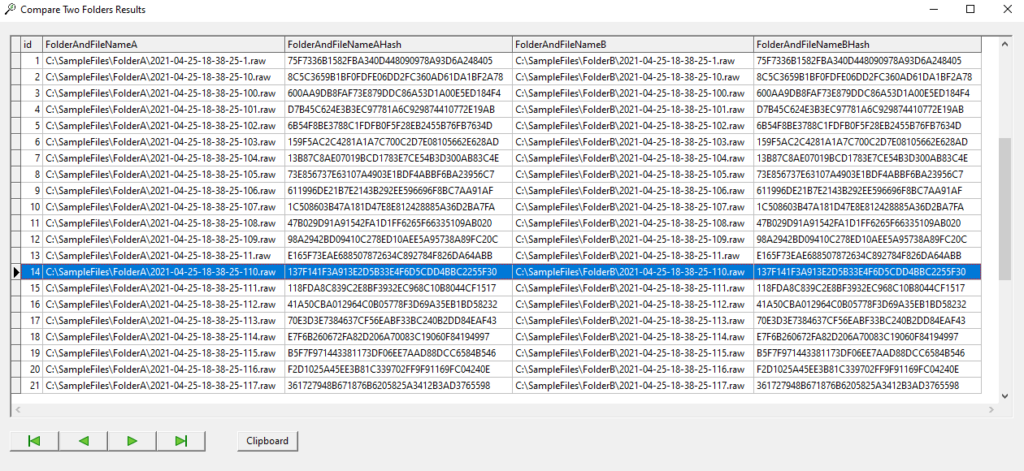

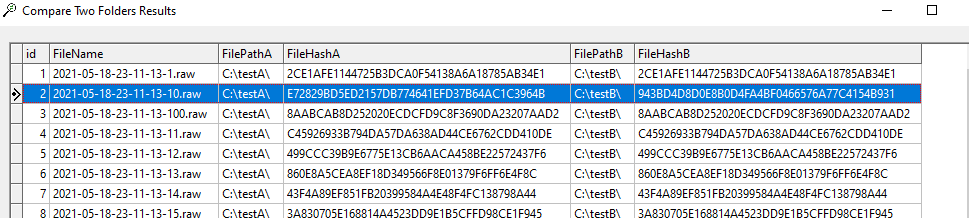

But if the file count differs, the display grid lists all the files as it should, row by row, UNTIL it reaches the row where a file that was in FolderA was NOT in FolderB, or vice versa. At that point, the rows become mis-aligned. The UPDATE SQL statement fills the “missing” FolderB cells with the data for the next file that was found in FolderB, instead of leaving them blank. The cell after that is populated with the next file, and so on, producing a cascading waterfall of mis-alignment (see screenshot). It still correctly reports a mis-match, and all the results are still displayed, but they are not displayed in line as they should be.

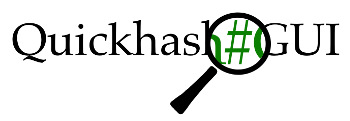

So, if we look at the screenshot above where the two folders do match, we can see file 2021-04-25-18-38-25-110.raw.

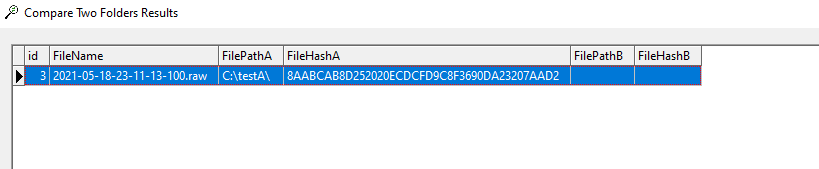

So if I then delete 2021-04-25-18-38-25-110.raw from FolderB, let’s see what happens now.

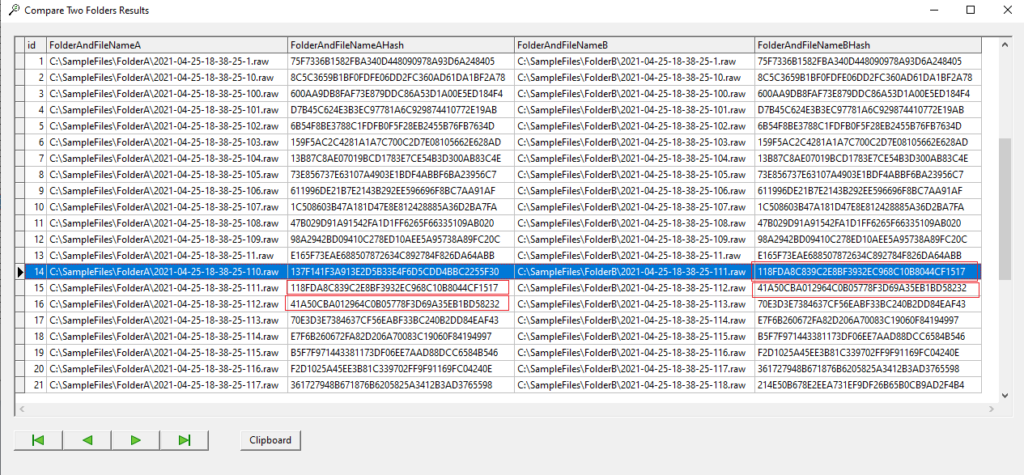

In FolderA we see 2021-04-25-18-38-25-110.raw with hash 137F141F3A913E2D5B33E4F6D5CDD4BBC2255F30. But the cell next to it, which by rights should be empty, contains the next file it did find in FolderB, which is 2021-04-25-18-38-25-111.raw with hash 118FDA8C839C2E8BF3932EC968C10B8044CF1517. On the next row, we then have 2021-04-25-18-38-25-111.raw with hash 118FDA8C839C2E8BF3932EC968C10B8044CF1517 alongside 2021-04-25-18-38-25-112.raw with hash 41A50CBA012964C0B05778F3D69A35EB1BD58232.

In fairness, nothing here is actually incorrect; it is just not listed as I intended. In fact, I even left a code comment in v3.2.0 that I meant to come back to and resolve, but I obviously forgot to do so. It said (you can see this in the v3.2.0 commit on GitHub):

```pascal
sqlCOMPARETWOFOLDERS.Close; // UPDATE only works if rows already exist. If count is different need to work out how to insert into Cols 3 and 4
```

The problem was this. Suppose you have 10 files in FolderA and 9 files in FolderB. When QuickHash looks through FolderA, it cannot know which of the corresponding files are, or are not, in FolderB until it has searched both folders and populated the grid. Only once it has gone through all the files in FolderB could it work that out. But by then the UPDATE SQL statement has already executed, and it simply populated the next empty cell with the next file it found.

So the values are all correct, and the mis-match is reported as it should be, but the way the files are displayed is out of line.
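The difference between the old and new behaviour can be modelled in a few lines of Python. This is a toy model, not QuickHash’s SQL. Pairing rows purely by position is effectively what “UPDATE the next empty row” did, while pairing by filename leaves a gap where a file is missing. The data is the example from this post, with hashes shortened to their first eight characters.

```python
# Shortened hash prefixes from the example in this post.
folder_a = [
    ("2021-04-25-18-38-25-110.raw", "137F141F"),
    ("2021-04-25-18-38-25-111.raw", "118FDA8C"),
    ("2021-04-25-18-38-25-112.raw", "41A50CBA"),
]
folder_b = folder_a[1:]  # -110.raw deleted from FolderB


def align_by_position(a, b):
    """Pair the n-th file of A with the n-th file of B (the v3.2.0 symptom)."""
    empty = (None, None)
    return [
        (a[i] if i < len(a) else empty, b[i] if i < len(b) else empty)
        for i in range(max(len(a), len(b)))
    ]


def align_by_name(a, b):
    """Pair files by filename, leaving None where a file is missing."""
    hashes_a, hashes_b = dict(a), dict(b)
    names = sorted(set(hashes_a) | set(hashes_b))
    return [(name, hashes_a.get(name), hashes_b.get(name)) for name in names]


for row in align_by_position(folder_a, folder_b):
    print(row)   # -110 sits next to -111, -111 next to -112: the waterfall
for row in align_by_name(folder_a, folder_b):
    print(row)   # -110 has None for FolderB; the rest line up
```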

This has now been fixed in v3.3.0, but rather radically. A series of new SQL statements has been introduced, along with a slew of new right-click menu options for the display grid. They give the user what I hope is almost every conceivable means to compare the two folders. Part of this means the display is slightly different now: it basically shows the filename, its hash in FolderA and its hash in FolderB. Filenames are now considered in the mix too, whereas they were not before. The user can then use all kinds of right-click options, including viewing duplicate files, files missing from FolderA, from FolderB, or from both, plus save options and copy-to-clipboard options. See screenshots below.
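For readers exploring a copied database themselves, a filename-keyed layout like the one described can be expressed in SQL that older SQLite builds accept. This is a sketch only. The table and column names are hypothetical, and this is not QuickHash’s actual schema or SQL, which the post does not show. `FULL OUTER JOIN` only arrived in SQLite 3.39.0, so the sketch emulates it with `LEFT JOIN` plus `UNION ALL`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE folder_a (filename TEXT NOT NULL, hash TEXT);
    CREATE TABLE folder_b (filename TEXT NOT NULL, hash TEXT);
""")
conn.executemany("INSERT INTO folder_a VALUES (?, ?)",
                 [("f1.raw", "AAAA"), ("f2.raw", "BBBB"), ("f3.raw", "CCCC")])
conn.executemany("INSERT INTO folder_b VALUES (?, ?)",
                 [("f1.raw", "AAAA"), ("f2.raw", "B0B0"), ("f4.raw", "DDDD")])

# One row per filename: hash in A, hash in B (NULL where the file is absent).
# LEFT JOIN + UNION ALL emulates FULL OUTER JOIN on pre-3.39 SQLite.
ALIGNED = """
    SELECT a.filename, a.hash, b.hash
      FROM folder_a AS a LEFT JOIN folder_b AS b ON a.filename = b.filename
    UNION ALL
    SELECT b.filename, NULL, b.hash
      FROM folder_b AS b
     WHERE b.filename NOT IN (SELECT filename FROM folder_a)
    ORDER BY 1
"""

# Files present in A but not in B.
MISSING_FROM_B = """
    SELECT filename FROM folder_a
     WHERE filename NOT IN (SELECT filename FROM folder_b)
"""

# Same filename in both folders, different content.
MISMATCHED = """
    SELECT a.filename, a.hash, b.hash
      FROM folder_a AS a JOIN folder_b AS b ON a.filename = b.filename
     WHERE a.hash <> b.hash
"""

for row in conn.execute(ALIGNED):
    print(row)
print(conn.execute(MISSING_FROM_B).fetchall())  # [('f3.raw',)]
print(conn.execute(MISMATCHED).fetchall())      # [('f2.raw', 'BBBB', 'B0B0')]
```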

Note, however, that this tab is designed to show a user that FolderA matches FolderB. Where it does not, it tries to help identify how the folders differ, as best it can, but it expects those differences to be minimal. For example, it suits folks who have migrated data from A to B, expect the two to be the same, and want to check by means of hash analysis. By contrast, it is not designed to be a file manager for people who live their digital lives in chaos and simply want a quick and easy way of working out how terribly out of sync two folders are. If that is your need, there are other, more helpful tools such as Directory Opus.

With this particular part of QuickHash I have just about reached my limit, and unless the project onboards some trusted others, I don’t think I can improve it much beyond where it is. I don’t have the time, and probably not the skills. That is a polite way of saying take it or leave it :-)

So, let’s look at the new version.

I have two folders containing files of random data made by “Teds Tremendous Data Generator”. They each hold 100 files, and one folder is a copy of the other. As you can see, the results grid looks good, with hashes nicely aligned, a matching result, etc. This will be the expected display for most users when they compare two folders they hope are the same.

When the user right-clicks, there is now an array of options. Many of them won’t be much use in this display, apart from the clipboard and save options, because everything matches.

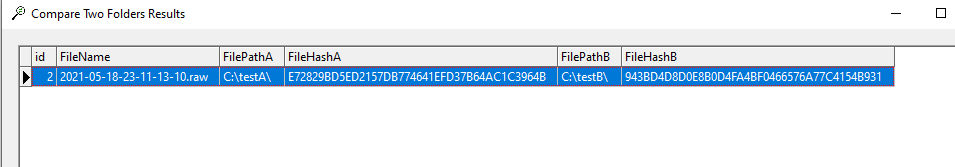

So now I will change a few hex bytes of one file in the TestB folder (2021-05-18-23-11-13-10.raw) and also delete one file from it (2021-05-18-23-11-13-100.raw). Let’s see what happens when I re-run the comparison and apply some right-click options. (I always tend to close and re-open QuickHash-GUI first, because the SQLite tables don’t always seem to get flushed.)

You get the idea?

Forensic Disk Images in Expert Witness Format (EWF), aka “E01 Images”

This is another huge addition to QuickHash-GUI, influenced largely by the increasing number of digital forensics companies and agencies who use the tool. E01 forensic images use the EWF format, first introduced in the late 1990s. It became a dominant format through the forensic tool EnCase, which used it extensively (and still does). Over the last two decades, many other forensic tools such as X-Ways Forensics and FTK Imager have also adopted it, amongst other formats.

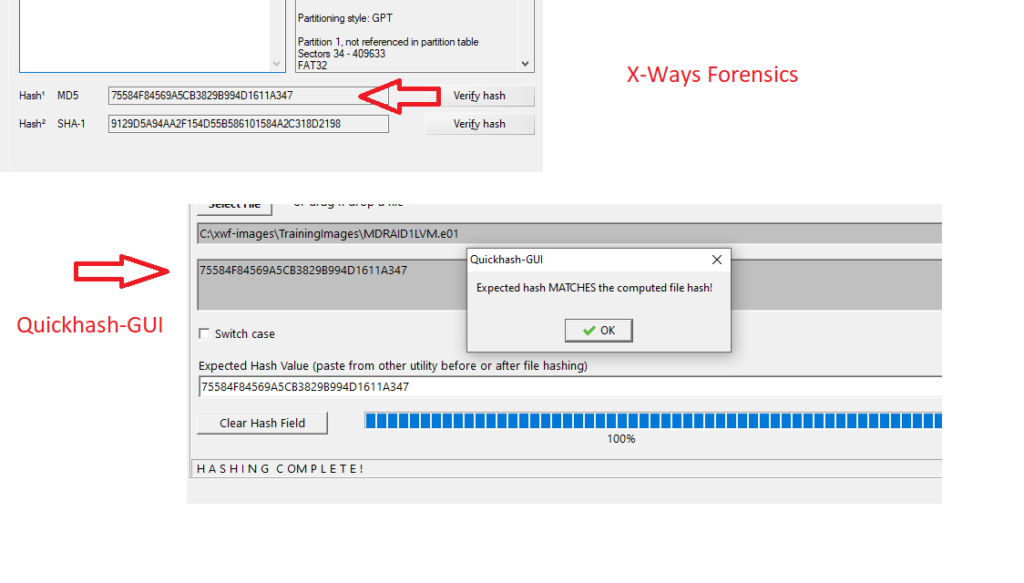

An E01 image is a set of one or more segment files (E01, E02, and so on) that store the data of an original digital device, with some metadata in each segment. When such an image is opened in a forensic tool, the tool parses all of the segments and presents the original data for exploration. As well as the data, the image contains the hash that was computed when the source data was acquired, stored within the image’s metadata.

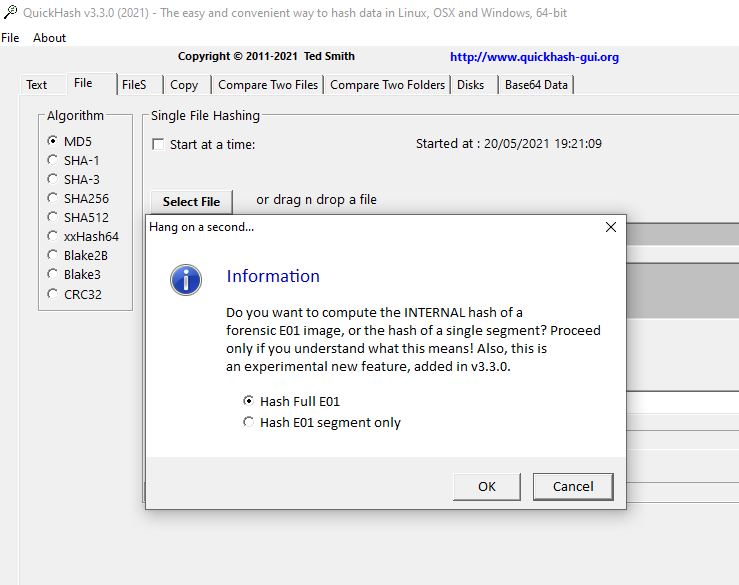

Thanks to the C library libewf by Joachim Metz, QuickHash-GUI v3.3.0 can now open an E01 image segment via the ‘File’ tab and offer a choice. The user can hash the actual forensic image in its entirety, which is the default. Alternatively, they can hash the literal image segment file, as all versions of QuickHash prior to v3.3.0 have done, perhaps to diagnose a fault with such a forensic image set.
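Why do the two choices give different answers? The toy Python sketch below shows it. In the real tool, libewf parses the segments and hands back the original media stream. Here, both the “segment file” and the decoded media are faked with in-memory bytes using a made-up wrapper, not the real EWF layout, and this sketch does not use libewf at all. Hashing any readable stream in fixed-size chunks works the same way in both cases. Only the decoded media can reproduce the acquisition hash.

```python
import hashlib
import io


def hash_stream(reader, algorithm="md5", chunk_size=1 << 20):
    """Hash any readable binary stream in fixed-size chunks."""
    digest = hashlib.new(algorithm)
    while True:
        chunk = reader.read(chunk_size)
        if not chunk:
            break
        digest.update(chunk)
    return digest.hexdigest().upper()


# Toy data only: NOT the real EWF layout, which libewf has to parse.
# The "segment file" here simply wraps the media in extra bytes.
media = b"original device sectors " * 40000
segment_file = b"FAKE-EWF-HEADER" + media + b"FAKE-EWF-TRAILER"
acquisition_hash = hashlib.md5(media).hexdigest().upper()  # hash of the source data

image_hash = hash_stream(io.BytesIO(media))           # "hash the forensic image"
segment_hash = hash_stream(io.BytesIO(segment_file))  # "hash the segment file"
print("image   matches acquisition hash:", image_hash == acquisition_hash)    # True
print("segment matches acquisition hash:", segment_hash == acquisition_hash)  # False
```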

The functionality is available in the Windows version (both 32- and 64-bit) and the Linux version (64-bit only) through the inclusion of DLL and SO files, both of which I compiled from source. These are included with the v3.3.0 download in a subfolder called “libs”, and, helpfully, they are also committed to GitHub.

This is a really, really big addition to the tool, and I hope it helps bring QuickHash-GUI into some digital forensic workflows here and there, perhaps as a secondary tool for dual-tool verification.

So if the user chooses to hash the actual E01 as an image, instead of just the file segment, QuickHash will do so. But the user can still choose to hash the segment itself, just as has always been possible with QuickHash-GUI. As the screenshot below shows, the results match world-class commercial tools, albeit not their speeds! What about OSX? Sorry, no dice. I haven’t been able to work out how to compile and include the library for OSX Big Sur, because of the changes in how Big Sur represents libraries on the filesystem.

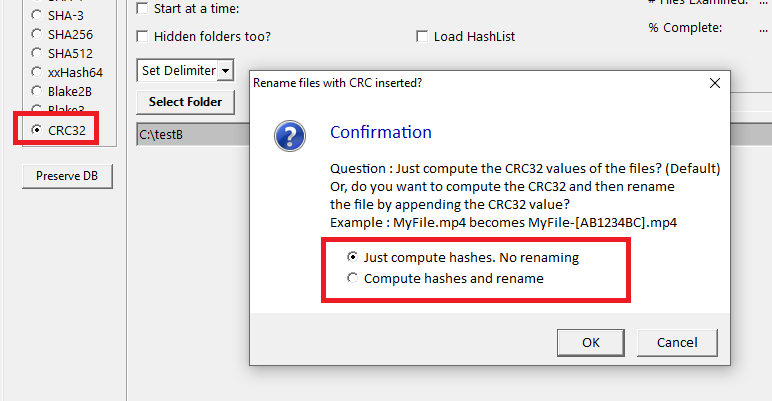

CRC32

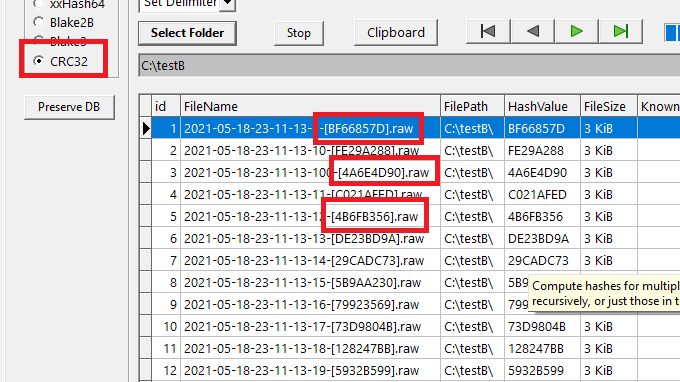

The eagle-eyed amongst you will notice the addition of the CRC32 checksum. Strictly speaking, it is not a hash algorithm, but it is a checksum still in wide use today, especially, I gather, by those who deal with movie/video/sound files a lot. It is commonplace for such files to be checked using CRC32 for speed, and the value is often placed into the filename.

So, by popular demand, I have at last got round to implementing it for all areas except disk hashing, where it makes no sense.

In addition, not only can you compute CRC32 checksums using QuickHash-GUI, you can also optionally embed the computed checksum into the filename. For example, MyFile.mp4 becomes MyFile-[AB123456].mp4. When the folder is selected, the user is prompted for the action to take, but only if CRC32 is selected and only via the FileS tab; it won’t do this in the other tabs. So suppose you have a folder full of mp4 files for which you a) want to compute CRC32 checksums and b) wish to have those checksums automatically inserted into their filenames en masse. QuickHash-GUI v3.3.0 will do that for you. Obviously the usual safety warnings apply: back up your data first, and check you can restore it. Do not come complaining that all your filenames are ruined due to QuickHash-GUI. Back up first, do a test run on another copy second, and then run it once you are sure.
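For anyone curious how embedding a CRC32 in a filename works, here is a minimal Python sketch. It is not QuickHash’s code. The `-[XXXXXXXX]` suffix format comes from the example above. The dry-run default (in the spirit of “test run first”) and the choice to skip files that are already tagged are my own assumptions. It also includes the reverse check: re-computing the CRC32 and comparing it with the value in the name.

```python
import re
import tempfile
import zlib
from pathlib import Path

CRC_TAG = re.compile(r"-\[([0-9A-F]{8})\]$")   # the "-[AB123456]" filename suffix


def crc32_of_file(path, chunk_size=1 << 20):
    """CRC32 of a file as 8 uppercase hex digits, computed chunk by chunk."""
    crc = 0
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            crc = zlib.crc32(chunk, crc)
    return f"{crc & 0xFFFFFFFF:08X}"


def embed_crc(path, dry_run=True):
    """Return MyFile-[CRC].ext for MyFile.ext; only rename when dry_run is False."""
    p = Path(path)
    if CRC_TAG.search(p.stem):
        return p                                  # already tagged: leave it alone
    target = p.with_name(f"{p.stem}-[{crc32_of_file(p)}]{p.suffix}")
    if not dry_run:
        p.rename(target)
    return target


def verify_tag(path):
    """True if the CRC32 embedded in the filename matches the file's content."""
    p = Path(path)
    match = CRC_TAG.search(p.stem)
    return bool(match) and match.group(1) == crc32_of_file(p)


with tempfile.TemporaryDirectory() as tmp:
    clip = Path(tmp, "MyFile.mp4")
    clip.write_bytes(b"123456789")              # standard CRC32 check input
    preview = embed_crc(clip)                    # dry run: nothing renamed yet
    renamed = embed_crc(clip, dry_run=False)
    tag_ok = verify_tag(renamed)
print(preview.name, renamed.name, tag_ok)  # MyFile-[CBF43926].mp4 MyFile-[CBF43926].mp4 True
```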

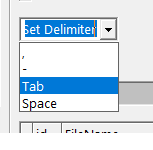

Delimiter Option

Also of note is a new drop-down menu in several tabs that lets the user choose a delimiter from several options. Comma is the default and will be used if the user forgets or chooses not to set one, as has always been the case in the program. But a hyphen, tab, or (heaven forbid) space character can be chosen instead.

Why has this been added? Well, for some users the comma presents a challenge, especially those who have files on disk with a comma in the filename. When they save the data as a CSV file, the comma in the filename fools the importing program into treating it as a delimiter, so the columns get thrown out of whack. Tab is much neater and not typically found in filenames. Hyphen and space were thrown in just for extra flexibility.
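The comma problem, and why tab avoids it, can be demonstrated with Python’s standard `csv` module. This is an illustration, not QuickHash’s export code, and the filename and hash are placeholders. A hand-joined line splits a comma-containing filename into extra columns, while a tab-delimited file parses back cleanly. The same caution applies to the hyphen option, since filenames like the 2021-04-25-… examples in this post are full of hyphens.

```python
import csv
import io

rows = [
    ("Filename", "Hash"),
    ("Holiday, Rome.jpg", "0123ABCD"),   # placeholder hash value
]


def write_rows(rows, delimiter):
    """Serialise rows with the chosen delimiter using the csv module."""
    buf = io.StringIO()
    csv.writer(buf, delimiter=delimiter, lineterminator="\n").writerows(rows)
    return buf.getvalue()


# Joining fields by hand with a comma: the filename splits into two columns.
naive_columns = ",".join(rows[1]).split(",")
print(len(naive_columns))   # 3 columns instead of 2

# Tab-delimited output parses back into exactly the original columns.
tab_text = write_rows(rows, "\t")
parsed = [tuple(r) for r in csv.reader(io.StringIO(tab_text), delimiter="\t")]
print(parsed == rows)       # True

# A quoting CSV writer also protects commas when comma stays the delimiter.
print(write_rows(rows, ","))
```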

A final mention is in respect of architecture.

Windows users make up the majority of the user base, and creating 32- and 64-bit versions is not too tricky. I ceased distributing x86 binaries with v3.2.0, but there was still a steady stream of folks asking if they could get an x86 version. So, this time, I’ve just done both. The download includes a binary that will work on both architectures, along with suitably compiled DLLs. For Linux it is not impossible either, but it is more of a fiddle. I have not included x86 for Linux, but I will assess demand and may go to the effort if it is merited. And for OSX, there is simply no point: Apple themselves have been driving (forcing, even) 64-bit adoption for the last few years.

If you have read this far, then I salute you! I could go on; the change list is enormous, as I said at the start. But while I still have you, let me explain that when I first started QuickHash back in 2011, life was different. I started it because developing Linux tools interested me, and it gave me something to concentrate my effort on. A decade on, I have nearly 1 million downloads and a regular feed of questions, feature requests, and bug reports. But my family life has grown beyond just me and the wife, and there are other caring needs, so I find it harder to find the time to implement many of the changes users ask of me. These last few months I have been working on this project most evenings and weekends. Those of you in the business might call it a “surge”, but a one-man surge instead of a team one! I can’t easily keep pace with the rate at which people use this product anymore. It gets downloaded several hundred times a day and is used in all corners of the world, across academia, private industry, government, the military, hobbyists, and everyone in between.

So you might be a compiled-language developer with some experience who is familiar with object-orientated variants of Pascal such as Delphi and Free Pascal (or one of those talented folks who can code in half a dozen languages!). If you also have experience with GitHub (branches, merging, etc.), can make cross-platform programming decisions instead of writing code that only works on one OS, and want to join the project, I’d be glad to consider you and have you help me out. Contact me if interested.

The GitHub repository can be found at https://github.com/tedsmith/quickhash

Anyone wishing to donate to the project to help fund the website costs etc. can do so, as usual, at https://www.paypal.com/paypalme/quickhashgui

On that concluding note, I am off for a rest while you, presumably, hop over to download the latest edition of QuickHash-GUI for your preferred operating system. If you don’t hear from me in a while, it’s because I fell asleep for 10 days!

Hi,

new QHgui user here.

I only use hashing to verify that big disk images are copied without corruption to a new place.

For that it would be awesome, if there would be a clock that shows how long hashing will take!

Is there a comparison of the efficiency of those 9 hashes?

Which is the fastest for hashing, e.g., a 6GB ISO image?

Hi…sorry for the late note. I didn’t notice your comment (didn’t get a notification for some reason).

Re “if there would be a clock that shows how long hashing will take!”: do you mean before it starts, based on the file size? That is not really possible, because your disk and CPU will affect how quickly the file can be read, as will whatever else your computer is asked to do during the process. There is no direct comparison that I know of, but for raw speed, xxHash or CRC32 will be the fastest. However, it is rare for ISO distributors to publish those values on their websites for you to compare against; it is usually SHA-1, SHA-256, or MD5. Of those three, MD5 is the fastest.
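If you want a rough comparison on your own machine, here is a small Python sketch that times several algorithms over an in-memory buffer. It is not part of QuickHash. xxHash is left out because it is not in Python’s standard library. Because the data sits in memory, this measures CPU cost only; hashing a 6GB ISO from disk will often be limited by read speed instead. Results vary by CPU, so treat the numbers as indicative.

```python
import hashlib
import time
import zlib

ALGORITHMS = ("crc32", "md5", "sha1", "sha256", "sha512", "blake2b")


def throughput_mb_s(name, data, rounds=3):
    """Best-of-`rounds` throughput, in MB/s, of one algorithm over `data`."""
    best = float("inf")
    for _ in range(rounds):
        start = time.perf_counter()
        if name == "crc32":
            zlib.crc32(data)
        else:
            hashlib.new(name, data).digest()
        best = min(best, time.perf_counter() - start)
    return len(data) / (1024 * 1024) / max(best, 1e-9)


def benchmark(names=ALGORITHMS, size_mb=32):
    data = bytes(size_mb * 1024 * 1024)   # zero-filled buffer held in memory
    return {name: throughput_mb_s(name, data) for name in names}


for name, speed in sorted(benchmark().items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:8s} {speed:10.1f} MB/s")
```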